ELK Stack

Hello Guys,

Today we are going to learn about ELK (Elasticsearch, Logstash, Kibana).

Introduction:

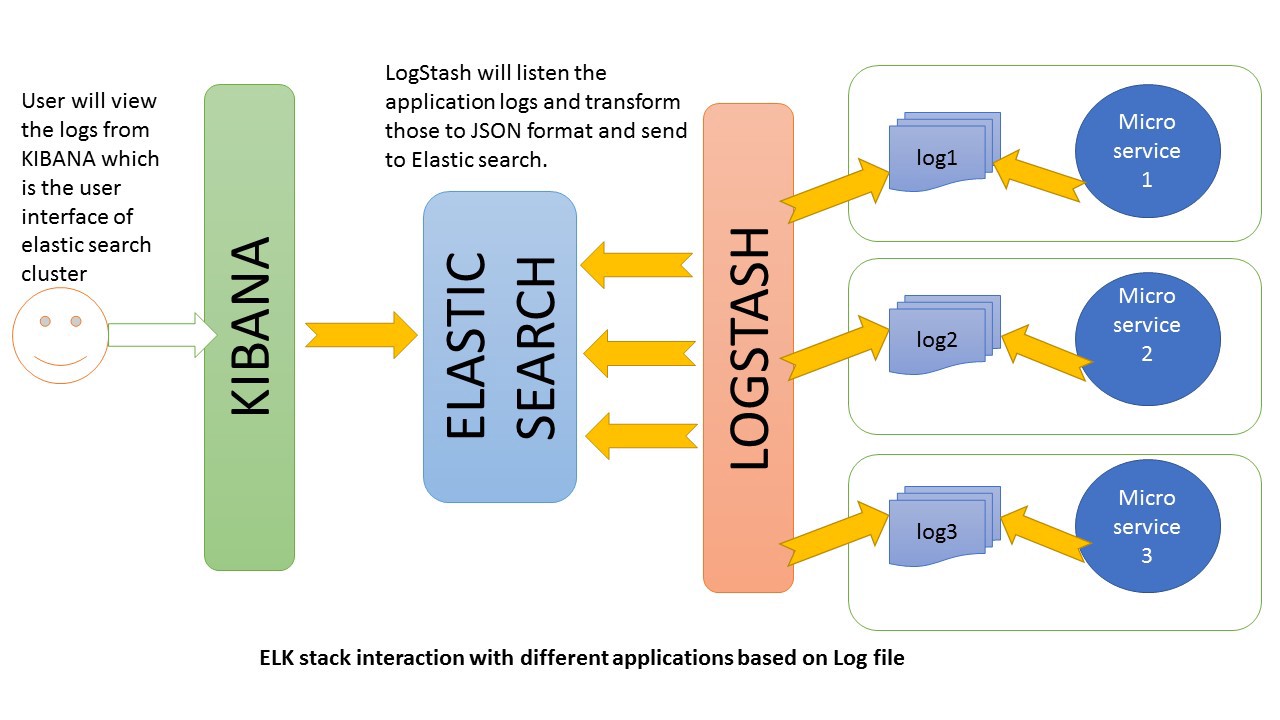

Our ELK stack setup has four main components:

Logstash: A tool for receiving, processing, and outputting logs of all kinds: system logs, web server logs, error logs, application logs, and just about anything else you can throw at it.

Elasticsearch: Stores all of the logs.

Kibana: A web interface for searching and visualizing logs, which will be proxied through Nginx.

Filebeat: Installed on the client servers that will send their logs to Logstash. Filebeat serves as a log shipping agent that uses the lumberjack networking protocol to communicate with Logstash.

In this blog, we will go over the installation of the ELK Stack on Ubuntu 14.04 (that is, Elasticsearch 2.2.x, Logstash 2.2.x, and Kibana 4.4.x). I will also show you how to configure it to gather and visualize the nginx and uwsgi logs of your systems in a centralized location, using Filebeat. Logstash is an open source tool for collecting, parsing, and storing logs for future use. Kibana is a web interface that can be used to search and view the logs that Logstash has indexed. Both of these tools are based on Elasticsearch, which is used for storing logs.

Centralized logging can be very useful when attempting to identify problems with your servers or applications, as it allows you to search through all of your logs in a single place. It is also useful because it allows you to identify issues that span multiple servers by correlating their logs during a specific time frame.

It is possible to use Logstash to gather logs of all types, but we will limit the scope of this tutorial to nginx and uwsgi logs.

Requirement

A minimum of two droplets (Ubuntu 14.04):

Server1: Install Elasticsearch, Logstash, Kibana, and Nginx

Server2: Install Filebeat

Installation

Let's get started on setting up our ELK Server! Elasticsearch and Logstash require Java, so we will install that now. We will install a recent version of Oracle Java 8 because that is what Elasticsearch recommends. Add the Oracle Java PPA to apt:

sudo add-apt-repository -y ppa:webupd8team/java

Update your apt package database:

sudo apt-get update

Install the latest stable version of Oracle Java 8 with this command (and accept the license agreement that pops up):

sudo apt-get -y install oracle-java8-installer

Now that Java 8 is installed, let’s install Elasticsearch.

Install Elasticsearch

Elasticsearch can be installed with a package manager by adding Elastic’s package source list.

Run the following command to import the Elasticsearch public GPG key into apt:

wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

If your prompt is just hanging there, it is probably waiting for your user’s password (to authorize the sudo command). If this is the case, enter your password.

Create the Elasticsearch source list:

echo "deb http://packages.elastic.co/elasticsearch/2.x/debian stable main" | sudo tee -a /etc/apt/sources.list.d/elasticsearch-2.x.list

Update your apt package database:

sudo apt-get update

Install Elasticsearch with this command:

sudo apt-get -y install elasticsearch

Elasticsearch is now installed. Start it with this command:

sudo service elasticsearch restart

Then run the following command to start Elasticsearch on boot up:

sudo update-rc.d elasticsearch defaults 95 10

Now that Elasticsearch is up and running, let’s install Kibana.

Install Kibana

Kibana can be installed with a package manager by adding Elastic’s package source list.

Create the Kibana source list:

echo "deb http://packages.elastic.co/kibana/4.4/debian stable main" | sudo tee -a /etc/apt/sources.list.d/kibana-4.4.x.list

Update your apt package database:

sudo apt-get update

Install Kibana with this command:

sudo apt-get -y install kibana

Kibana is now installed.

Now enable the Kibana service, and start it:

sudo update-rc.d kibana defaults 96 9

sudo service kibana start

Before we can use the Kibana web interface, we have to set up a reverse proxy. Let’s do that now, with Nginx.

Install Nginx

Kibana should listen only on localhost (the server.host setting in its kibana.yml), so we must set up a reverse proxy to allow external access to it. We will use Nginx for this purpose.

Use apt to install Nginx and apache2-utils:

sudo apt-get install nginx apache2-utils

Use htpasswd to create an admin user that can access the Kibana web interface:

sudo htpasswd -c /etc/nginx/htpasswd.users admin

Enter a password at the prompt. Remember this login, as you will need it to access the Kibana web interface.

Now open the Nginx default server block in your favorite editor. We will use vi:

sudo vi /etc/nginx/sites-available/default

Delete the file’s contents, and paste the following code block into the file. Be sure to update the server_name to match your server’s name. If you don't have one, remove the server_name parameter.

/etc/nginx/sites-available/default

server {
    listen 80;
    server_name example.com;

    auth_basic "Restricted Access";
    auth_basic_user_file /etc/nginx/htpasswd.users;

    location / {
        proxy_pass http://localhost:5601;
        proxy_redirect off;
    }
}

Save and exit. This configures Nginx to direct your server’s HTTP traffic to the Kibana application, which is listening on localhost:5601. Nginx will also use the htpasswd.users file that we created earlier and require basic authentication.

Now restart Nginx to put our changes into effect:

sudo service nginx restart

Kibana is now accessible via your FQDN or the public IP address of your ELK Server i.e. http://elk_server_public_ip/. If you go there in a web browser, after entering the “admin” credentials, you should see a Kibana welcome page which will ask you to configure an index pattern. Let’s get back to that later, after we install all of the other components.
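If you ever want to reach the proxied Kibana from a script instead of a browser, it helps to see what the browser actually sends after you type the admin credentials. The snippet below is only a minimal sketch, assuming the HTTP Basic authentication that auth_basic enables; the password `s3cret` and the helper name `basic_auth_header` are made up for illustration.

```python
import base64


def basic_auth_header(username: str, password: str) -> dict:
    """Build the Authorization header used by HTTP Basic authentication."""
    # Basic auth sends "username:password" base64-encoded (encoded, not encrypted).
    raw = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(raw).decode("ascii")
    return {"Authorization": f"Basic {token}"}


# Hypothetical credentials; use the ones you created with htpasswd.
headers = basic_auth_header("admin", "s3cret")
print(headers)
```

Since the credentials are only base64-encoded, anyone who can see the traffic can read them, so plain HTTP is best kept to trusted networks.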

Install Logstash

The Logstash package is available from the same repository as Elasticsearch, and we already installed that public key, so let’s create the Logstash source list:

echo 'deb http://packages.elastic.co/logstash/2.2/debian stable main' | sudo tee /etc/apt/sources.list.d/logstash-2.2.x.list

Update your apt package database:

sudo apt-get update

Install Logstash with this command:

sudo apt-get install logstash

Note: Logstash 5.x is also available, so consider installing the latest version of Logstash instead.

Logstash is installed but it is not configured yet.

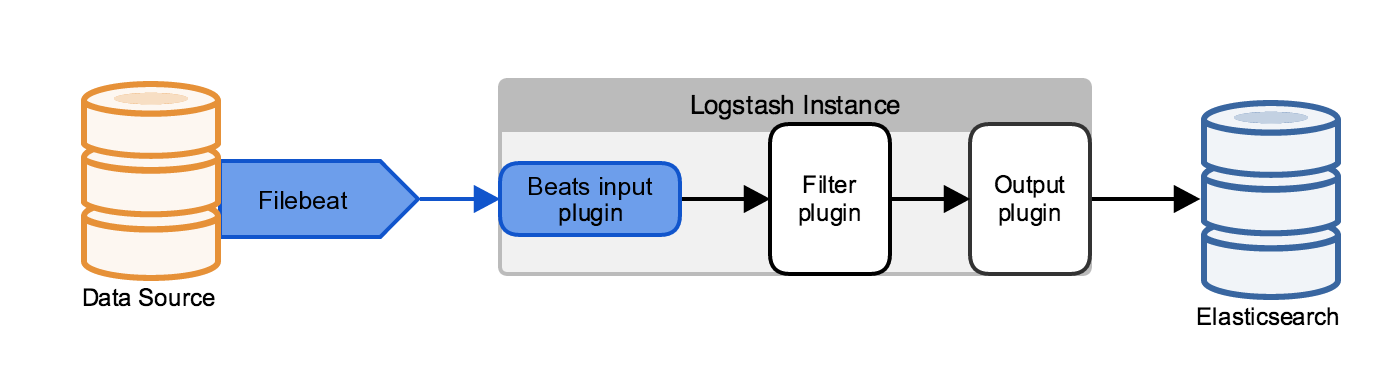

First, we will create a Logstash pipeline that uses Filebeat to take uwsgi and nginx web logs as input, parses those logs to create specific, named fields, and writes the parsed data to an Elasticsearch cluster.

Configuring Filebeat to Send Log Lines to Logstash

Before you create the Logstash pipeline, you’ll configure Filebeat on SERVER2 to send log lines to Logstash. The Filebeat client is a lightweight, resource-friendly tool that collects logs from files on the server and forwards these logs to your Logstash instance for processing. Filebeat is designed for reliability and low latency. Filebeat has a light resource footprint on the host machine, and the Beats input plugin minimizes the resource demands on the Logstash instance.

Install the latest Filebeat on SERVER2. After installing it, you need to configure it: open the filebeat.yml file located in your Filebeat installation directory and replace its contents with the following lines. Make sure paths points to your nginx or uwsgi log file.

filebeat:
  prospectors:
    -
      paths:
        - /var/log/nginx.log
      input_type: log
      document_type: nginx_server2
    -
      paths:
        - /var/log/uwsgi.log
      input_type: log
      document_type: wsgi_server2
  registry: /var/lib/filebeat/registry
output:
  logstash:
    hosts: ["<IP>:5044"]
logging:
  to_files: true
  files:
    path: /var/log/filebeat
    name: filebeat
    rotateeverybytes: 10485760
  level: error

Most of these settings are self-explanatory, but let's look at the important ones.

document_type: Logstash on SERVER1 uses this value to tell which server and which kind of log an event came from, and to process it accordingly.

input_type: log reads every line of the log file (this is the default).

registry: The registry file stores the state and location information that Filebeat uses to track where it was last reading. If you want to start reading at the end of all files, set the tail_files option in the Filebeat configuration file to true. If you want to reset Filebeat, clear the contents of the registry file and restart Filebeat.

ignore_older: If this option is enabled, Filebeat ignores any files that were modified before the specified timespan. Configuring ignore_older can be especially useful if you keep log files for a long time. For example, if you want to start Filebeat but only send the newest files and files from the last week, you can configure this option.
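To make the registry idea more concrete, here is a small sketch of offset tracking. It is not Filebeat's implementation, just a simplified illustration of the same idea: remember how far each file has been read, and resume from there on the next run. The function name `read_new_lines` and the JSON layout of the registry are assumptions made for this example.

```python
import json
import os


def read_new_lines(log_path: str, registry_path: str) -> list:
    """Return lines added to log_path since the previous call.

    The byte offset reached so far is saved in registry_path, so a restart
    resumes where the last run stopped (a simplified take on a registry file).
    """
    registry = {}
    if os.path.exists(registry_path):
        with open(registry_path) as fh:
            content = fh.read().strip()
        # An emptied registry behaves like a missing one: start from the top.
        if content:
            registry = json.loads(content)

    offset = registry.get(log_path, 0)
    with open(log_path, "rb") as fh:
        fh.seek(offset)
        data = fh.read()
        registry[log_path] = fh.tell()

    # Persist the new offset before handing the lines to the caller.
    with open(registry_path, "w") as fh:
        json.dump(registry, fh)

    return data.decode("utf-8").splitlines()
```

Calling it twice in a row returns the new lines once and then an empty list, and deleting or emptying the registry makes the next call start again from the beginning of the file, which mirrors the reset described above.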

Configuring Logstash for Filebeat Input

Next, we will create a Logstash configuration pipeline on SERVER1 that uses the Beats input plugin to receive events from Filebeat on SERVER2.

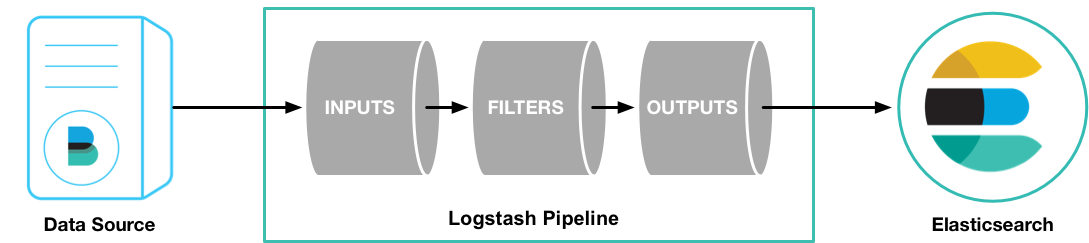

A Logstash pipeline has two required elements, input and output, and one optional element, filter. The input plugins consume data from a source, the filter plugins modify the data as you specify, and the output plugins write the data to a destination.

input {
  beats {
    port => 5044
  }
}

filter {
  if [type] == "nginx_server2" or [type] == "nginx_server3" {
    grok {
      match => { "message" => "%{IPORHOST:remote_addr} - %{USERNAME:remote_user} \[%{HTTPDATE:time_local}\] %{QS:request} %{INT:status} %{INT:body_bytes_sent} %{QS:http_referer} %{QS:http_user_agent}" }
    }
    date {
      match => [ "time_local" , "dd/MMM/YYYY:HH:mm:ss Z" ]
    }
  }

  if [type] == "wsgi_server2" or [type] == "wsgi_server3" {
    # Keep only the uwsgi request summary lines and drop everything else.
    if ([message] =~ "\bgenerated\b") {
      grok {
        match => { "message" => "\[pid: %{NUMBER}\|app: %{NUMBER}\|req: %{NUMBER}/%{NUMBER}\] %{IP} \(\) \{%{NUMBER} vars in %{NUMBER} bytes\} %{SYSLOG5424SD:DATE} %{WORD:method} %{URIPATHPARAM:end_point} \=\> generated %{NUMBER} bytes in %{NUMBER} msecs \(HTTP/%{NUMBER} %{NUMBER:status}\) %{NUMBER} headers in %{NUMBER}" }
      }
      # The grok above stores the time in DATE, so this filter only takes
      # effect for events that carry a "timestamp" field.
      date {
        match => [ "timestamp" , "dd/MMM/yyyy:HH:mm:ss Z" ]
      }
    } else {
      drop {}
    }
  }
}

output {
  if [type] == "nginx_server2" {
    elasticsearch {
      index => "nginx_server2"
    }
  }

  if [type] == "wsgi_server2" {
    elasticsearch {
      index => "wsgi_server2"
    }
  }
}

input: Receives the logs from Filebeat on port 5044.

filter: Filters out the unwanted data. One of the most important things here is the grok pattern, which needs to be carefully structured to get the desired output. We will look at the grok pattern in detail later.

output: After filtering, the output block sends each log to a particular Elasticsearch index on the basis of its document_type.
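The routing logic of these three blocks can be sketched in a few lines of Python. This is not how Logstash is implemented; it only mirrors the conditionals in the configuration above, and the function name `route` is an assumption for this example.

```python
import re

# Same check as the `[message] =~ "\bgenerated\b"` conditional in the filter block.
GENERATED = re.compile(r"\bgenerated\b")

WSGI_TYPES = {"wsgi_server2", "wsgi_server3"}
# Only these types have an elasticsearch output in the configuration.
INDEXED_TYPES = {"nginx_server2", "wsgi_server2"}


def route(event: dict):
    """Return the index an event would be written to, or None if it is not indexed."""
    event_type = event.get("type")

    # Filter stage: uwsgi lines that are not request summaries are dropped.
    if event_type in WSGI_TYPES and not GENERATED.search(event.get("message", "")):
        return None

    # Output stage: the index name is the same as the document_type.
    if event_type in INDEXED_TYPES:
        return event_type
    return None


print(route({"type": "wsgi_server2", "message": "GET / => generated 42 bytes in 1 msecs"}))
print(route({"type": "wsgi_server2", "message": "spawned uWSGI worker 1"}))
```

Note that events typed nginx_server3 or wsgi_server3 pass the filter but match no output condition, so in this sketch (as in the configuration) they are never written to an index.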

Grok Filter Plugin

The grok filter plugin is one of several plugins that are available by default in Logstash.

The grok filter plugin enables you to parse the unstructured log data into something structured and queryable.
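Grok patterns are built on regular expressions, so a rough feel for what "structured and queryable" means can be had with a plain regex and named groups. The sketch below is a hand-written Python approximation for a uwsgi request line of the kind this tutorial handles; it is not the grok pattern itself, and the names `UWSGI_LINE` and `parse_uwsgi` are made up for the example.

```python
import re

# Named groups play the role of grok's %{PATTERN:field_name} captures.
UWSGI_LINE = re.compile(
    r"\[pid: \d+\|app: \d+\|req: \d+/\d+\] "
    r"\S+ \(\) "
    r"\{\d+ vars in \d+ bytes\} "
    r"(?P<DATE>\[[^\]]+\]) "
    r"(?P<method>\w+) (?P<end_point>\S+) "
    r"=> generated \d+ bytes in \d+ msecs "
    r"\(HTTP/[\d.]+ (?P<status>\d+)\)"
)


def parse_uwsgi(line: str):
    """Return the named fields of a uwsgi request line, or None if it does not match."""
    match = UWSGI_LINE.search(line)
    return match.groupdict() if match else None


sample = (
    "[pid: 14816|app: 0|req: 70253/563621] 27.63.136.255 () "
    "{72 vars in 1366 bytes} [Wed Oct 5 12:50:51 2016] "
    "GET /api/v1/core/capturedata/?cid=9e92c1d&tag_id=49255c39 "
    "=> generated 42 bytes in 1 msecs (HTTP/1.1 200) "
    "3 headers in 96 bytes (1 switches on core 0)"
)
print(parse_uwsgi(sample))
```

Each named group becomes a key in the resulting dictionary, in the same way that each named grok capture becomes a field on the Logstash event.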

Because the grok filter plugin looks for patterns in the incoming log data, configuring the plugin requires you to make decisions about how to identify the patterns that are of interest to your use case. A representative line from the web server log (uwsgi) sample looks like this:

[pid: 14816|app: 0|req: 70253/563621] 27.63.136.255 () {72 vars in 1366 bytes} [Wed Oct 5 12:50:51 2016] GET /api/v1/core/capturedata/?cid=9e92c1d&tag_id=49255c39 => generated 42 bytes in 1 msecs (HTTP/1.1 200) 3 headers in 96 bytes (1 switches on core 0)

To parse such a log line, we use the following grok pattern:

"\[pid: %{NUMBER}\|app: %{NUMBER}\|req: %{NUMBER}/%{NUMBER}\] %{IP} \(\) \{%{NUMBER} vars in %{NUMBER} bytes\} %{SYSLOG5424SD:DATE} %{WORD:method} %{URIPATHPARAM:end_point} \=\> generated %{NUMBER} bytes in %{NUMBER} msecs \(HTTP/%{NUMBER} %{NUMBER:status}\) %{NUMBER} headers in %{NUMBER}"

This grok pattern generates the following output:

{
  "_index": "wsgi_server2",
  "_type": "wsgi_server2",
  "_id": "AVhDZQdGnue98hvXgj3j",
  "_version": 1,
  "_score": 1,
  "_source": {
    "message": "[pid: 14816|app: 0|req: 70253/563621] 27.63.136.255 () {72 vars in 1366 bytes} [Wed Oct 5 12:50:51 2016] GET /api/v1/core/capturedata/?cid=9e92c1d39ed5&tag_id=49255c39818 => generated 42 bytes in 1 msecs (HTTP/1.1 200) 3 headers in 96 bytes (1 switches on core 0)",
    "@version": "1",
    "@timestamp": "2016-11-08T10:03:37.478Z",
    "beat": {
      "hostname": "datacap03",
      "name": "datacap03"
    },
    "source": "/var/log/uwsgi.log",
    "offset": 174538455,
    "type": "wsgi_server2",
    "input_type": "log",
    "host": "server2",
    "tags": [
      "beats_input_codec_plain_applied"
    ],
    "DATE": "[Wed Oct 5 12:50:51 2016]",
    "method": "GET",
    "end_point": "/api/v1/core/capturedata/?",
    "status": "200"
  }
}

You can visualize the logs in Kibana as well.

That is all for the ELK stack. For the deployment script (via Ansible) or the source code, follow: Source Code

Happy coding :)